POSITION TRACKING WIRELESS NAMETAGS

Question…

Sight vs Sound: a system built to study viewer immersion in a gallery space. It augments the soundscape of each displayed scene according to the viewer's physical position and orientation in the room.

This is a proof-of-concept system for potential pop-ups, gallery installations, retail stores and much more.

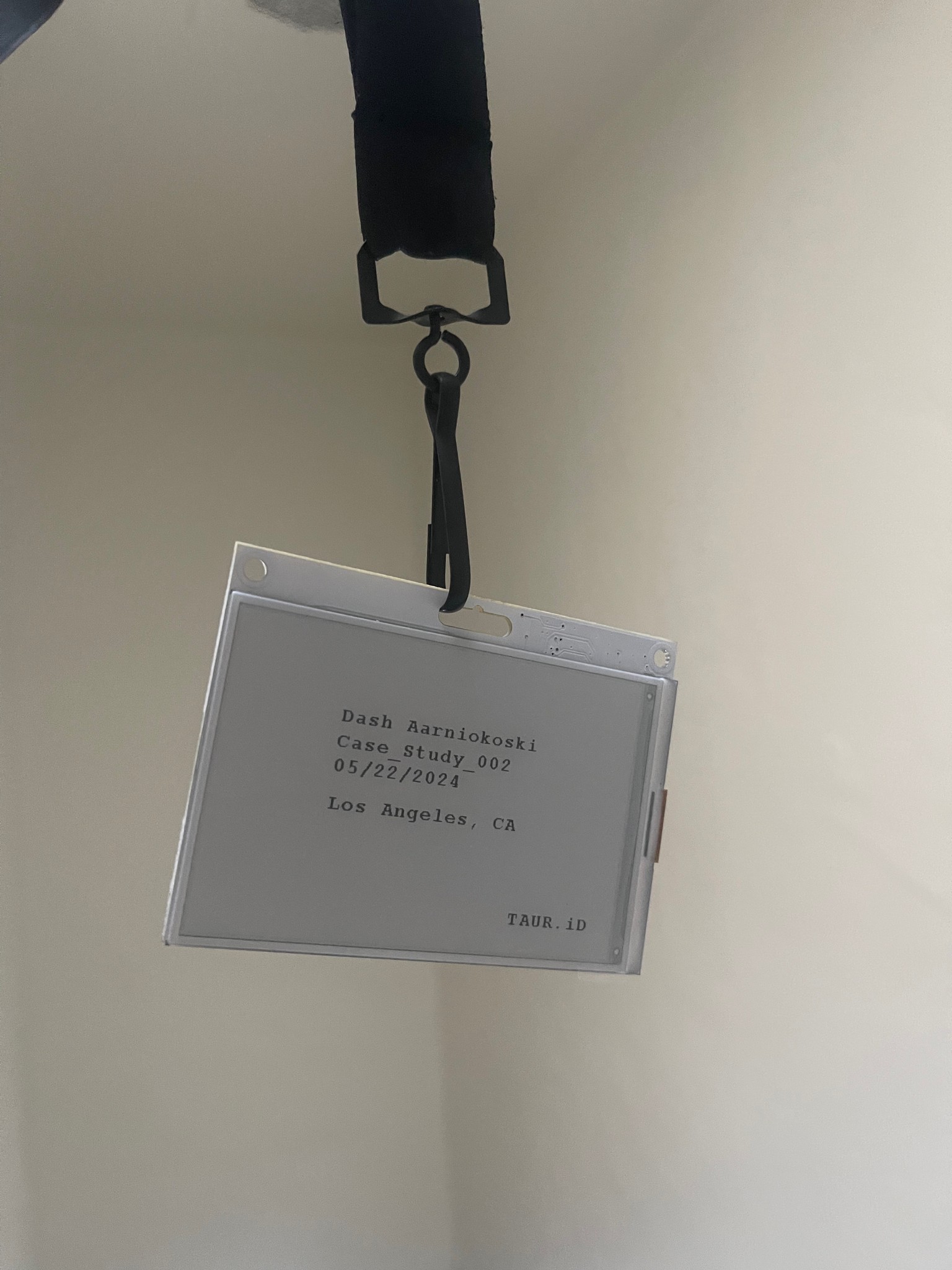

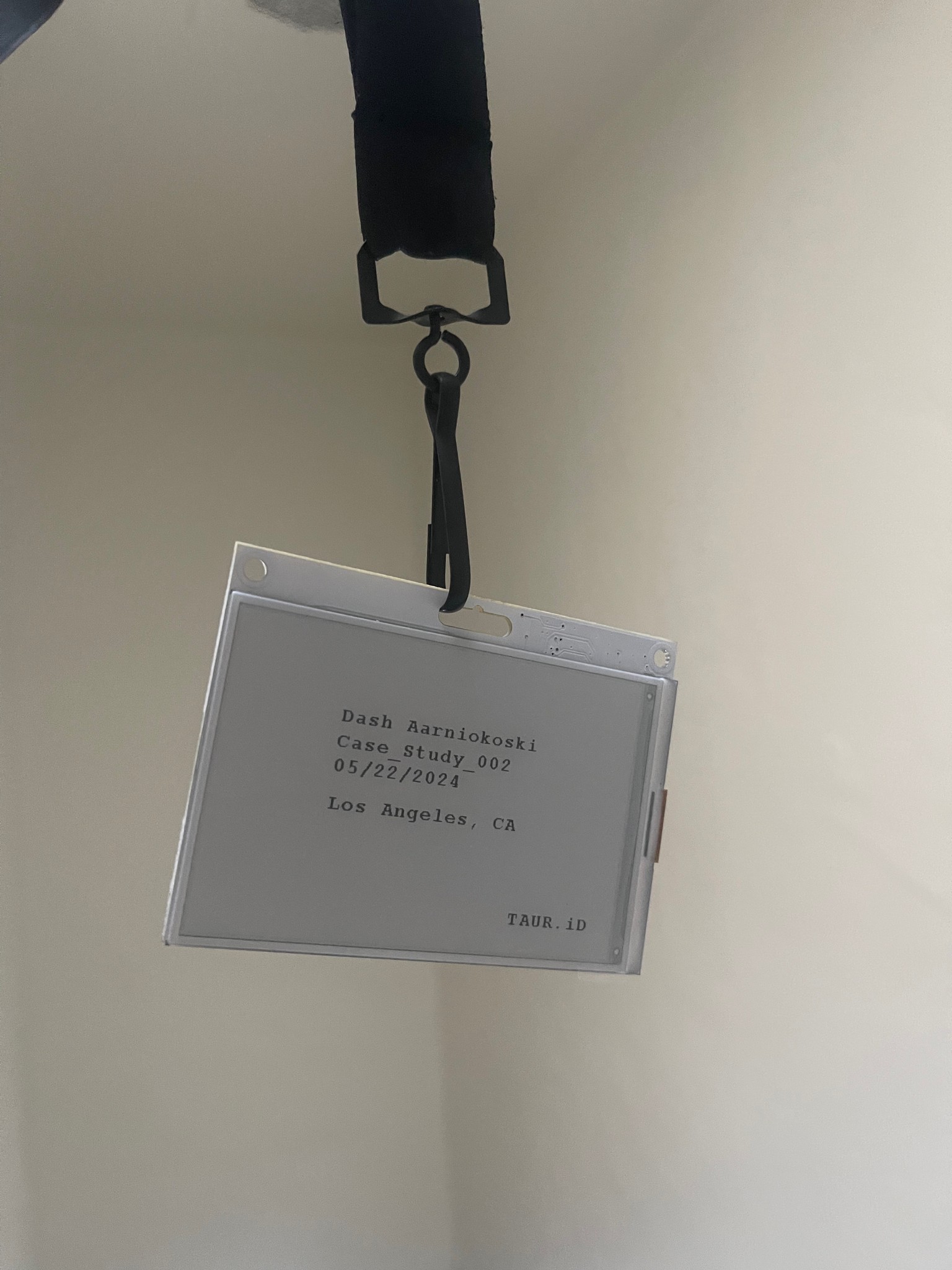

As guests enter, each receives a customized e-ink name tag on a lanyard, programmed via sound waves.

The name tag acts as both an admission ticket into the gallery and a positional tracker. As the guest walks around the room, the name tag communicates with the speakers to change the output of each scene's soundscape depending on the viewer's proximity and orientation to that scene.

As the viewer gets closer to a scene, its sound gets louder. As they move further away, it gets quieter. If they look at the scene, its sound becomes more prominent. If they turn away, it fades into the background.
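The mapping from position and orientation to loudness can be sketched in a few lines. This is only an illustration, not the Unreal Engine or TouchDesigner implementation: the function name `scene_gain`, the linear distance falloff, the cosine facing curve, and the `max_distance` and `min_gain` parameters are all assumptions chosen to match the behavior described above.

```python
import math


def scene_gain(viewer_xy, heading_rad, scene_xy, max_distance=10.0, min_gain=0.1):
    """Return a gain in [0, 1] for one scene (hypothetical helper).

    viewer_xy, scene_xy: (x, y) positions in metres (assumed units).
    heading_rad: direction the viewer faces, in radians.
    """
    dx = scene_xy[0] - viewer_xy[0]
    dy = scene_xy[1] - viewer_xy[1]
    distance = math.hypot(dx, dy)

    # Proximity: 1.0 at the scene, falling linearly to 0.0 at max_distance.
    proximity = max(0.0, 1.0 - distance / max_distance)

    # Orientation: 1.0 when facing the scene, 0.0 when facing directly away.
    bearing = math.atan2(dy, dx)
    facing = (1.0 + math.cos(bearing - heading_rad)) / 2.0

    # Keep a floor so a scene behind the viewer fades into the
    # background instead of going silent.
    return proximity * (min_gain + (1.0 - min_gain) * facing)


if __name__ == "__main__":
    # Viewer at the origin facing along +x, scene 5 m straight ahead.
    print(scene_gain((0.0, 0.0), 0.0, (5.0, 0.0)))
    # Same position, viewer turned directly away from the scene.
    print(scene_gain((0.0, 0.0), math.pi, (5.0, 0.0)))
```

Multiplying the two factors means a nearby scene behind the viewer stays quieter than the same scene in view, while the `min_gain` floor keeps it audible as background.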

The visuals are hosted on four displays at a time, selected randomly from a bank of dozens of potential scenes.

Every scene is 60 seconds long, so the displays cycle in unison.
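The scene selection could look something like the sketch below. It assumes scenes are drawn without repetition, so no two displays show the same scene in a cycle; the write-up does not state this, and the names `pick_scenes` and `scene_bank` are hypothetical.

```python
import random

SCENE_LENGTH_S = 60  # every scene has the same duration, so displays stay in sync


def pick_scenes(scene_bank, display_count=4, rng=None):
    """Pick one distinct scene per display from the bank (hypothetical helper)."""
    rng = rng or random.Random()
    # random.sample draws without replacement, so no scene repeats in a cycle.
    return rng.sample(scene_bank, display_count)


if __name__ == "__main__":
    bank = [f"scene_{i:02d}" for i in range(24)]  # placeholder scene names
    print(pick_scenes(bank))
```

Because every scene shares one length, a single 60-second timer is enough to trigger the next draw for all four displays at once.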

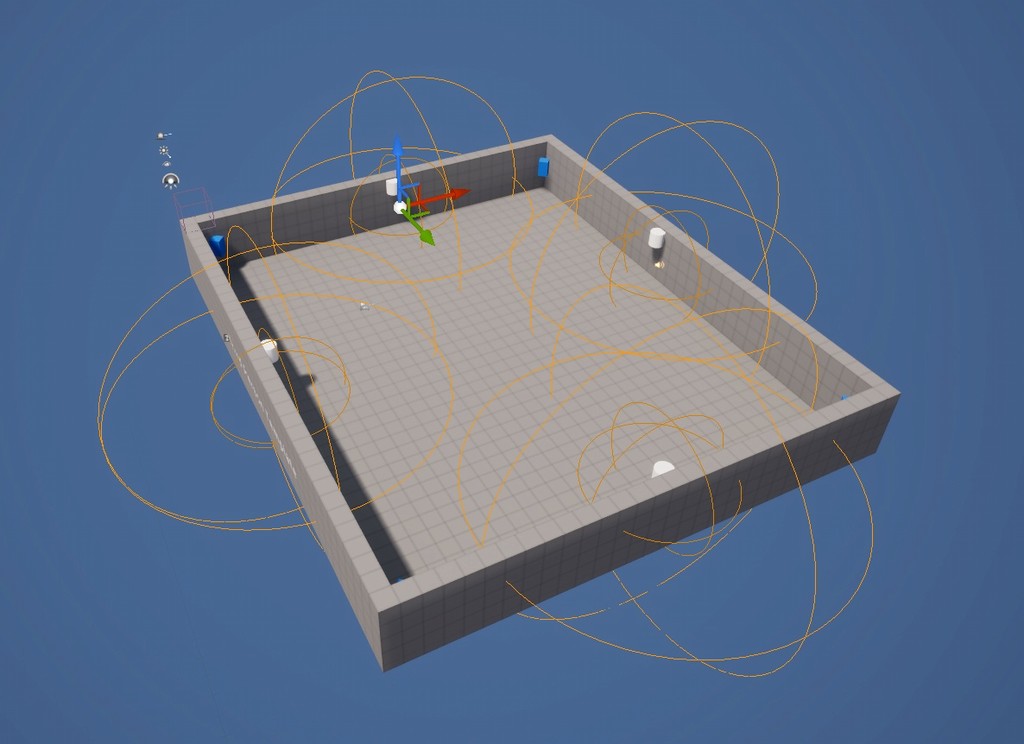

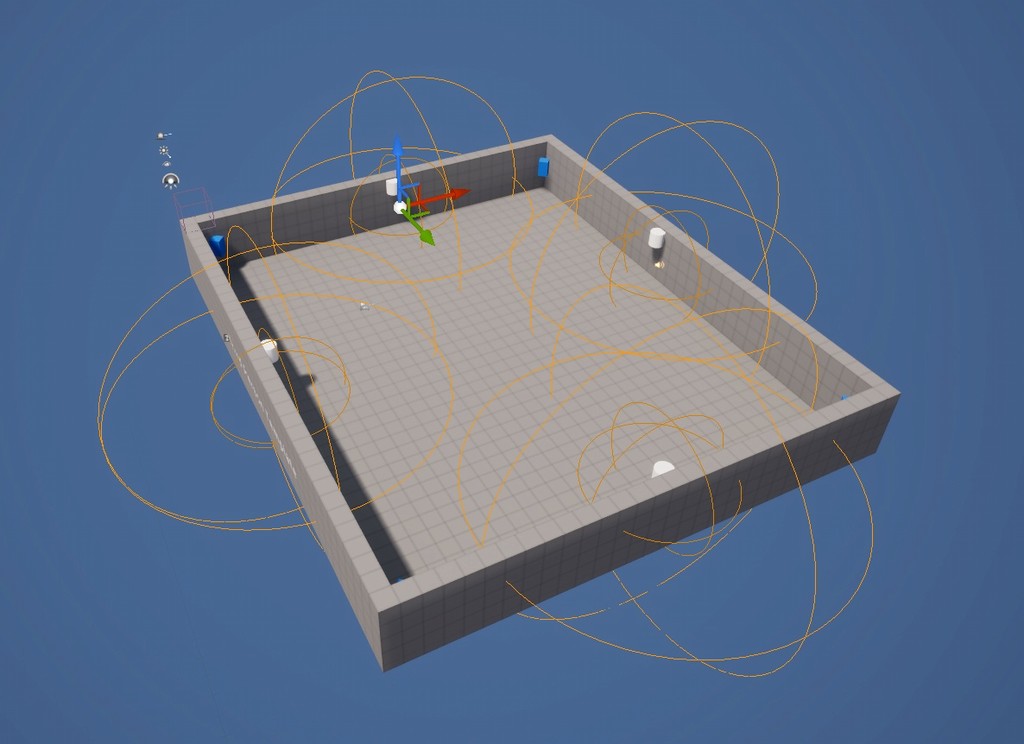

All the audio is pumped into the room from four speakers, one in each corner of the room.

The goal is to determine whether subtly augmenting the sound in a space, driven by the viewer, creates a more engaging experience than visuals with no audio.

The system was built in Unreal Engine and TouchDesigner.

Does the introduction of viewer-imposed audio augmentation in a gallery environment create a more immersive experience than with visuals alone?